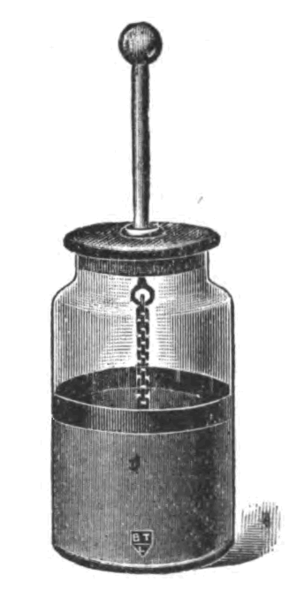

The invention of the Leyden jar marked a significant moment in the history of electrical engineering. The Leyden jar can be thought of as the first electrical capacitor: a device that stores and releases electrical energy.

The Invention of the Leyden Jar

During the 18th century, the mysterious phenomenon of electricity was becoming a hot topic among learned men of science. Electricity could only be created and observed in the moment. One of the mysteries to be solved was whether electricity could be stored for later use, and if so, how. The invention that solved this mystery became known as the Leyden jar, named after Leyden (Leiden), the Dutch city where one of its early inventors worked.

The Leyden jar is typically credited to two individuals who independently came up with the same idea. In Germany, Ewald Georg von Kleist was experimenting with electricity. He attempted to store electricity in a medicine bottle filled with water, with a nail inserted through a cork stopper. He charged the jar by bringing the nail into contact with an electrostatic generator, assuming that the glass would prevent the electricity from escaping. While holding the jar in one hand, he accidentally touched the nail with the other and received a significant shock, proving that electricity was indeed stored inside the jar.

Von Kleist's experiments were not well known, and around the same time another experimenter, Peter van Musschenbroek of Leyden in the Netherlands, stumbled upon the same invention. Musschenbroek's device was much like von Kleist's: a glass jar filled with water, with a metal rod passing through a cork that sealed the top. The outside of the jar was coated with metal foil. When an electric charge was applied to the metal rod, it was found that electricity could be stored in the jar. Unfortunately for anyone who then touched the rod, the stored charge delivered a significant shock. Musschenbroek recorded what happened when he first touched the rod:

Suddenly I received in my right hand a shock of such violence that my whole body was shaken as by a lightning stroke…. I believed that I was done for.

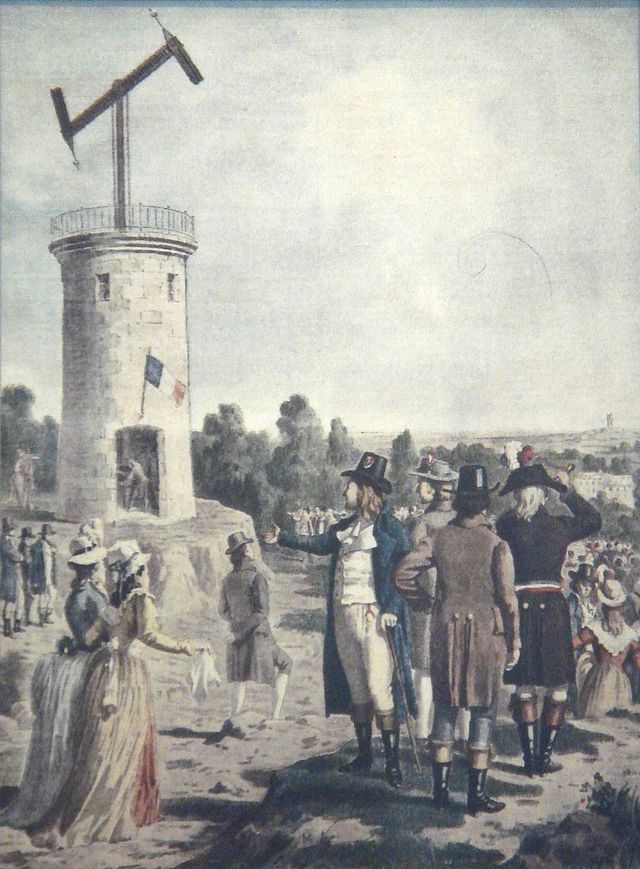

It did not take long before Musschenbroek's Leyden jar was being used and improved by others. In 1746, the English physician William Watson improved the jar's storage capacity by coating both the inside and the outside with metal foil. That same year, the French physicist Jean-Antoine Nollet discharged a Leyden jar in front of the French King Louis XV. For the demonstration, Nollet arranged 180 Royal Guards in a circle, all holding hands, and passed the charge from the Leyden jar through the circle. Every guard felt the shock almost instantaneously, to the delight of the King and his court. Demonstrations such as this brought widespread attention to the exciting new field of electricity.

Impact and Legacy of the Leyden Jar

Prior to the invention of the Leyden jar, electricity could only be observed and experimented on at the moment it was created. The Leyden jar changed this by allowing scientists to store electrical energy and use it when needed. Researchers could now conduct experiments on electric discharge, conductivity, and other electrical phenomena. The jar was also portable, especially compared to the bulky electrostatic generators of the day, and several jars could be linked together to provide additional storage capacity. These abilities contributed to the rapid growth of electrical science.

For example, the American scientist and statesman Benjamin Franklin famously used the Leyden jar in his kite experiment of 1752. Franklin flew a kite during a thunderstorm in an attempt to prove that lightning was a form of electricity. He attached a metal key to the lower end of the kite string, and the key was in turn connected to a Leyden jar. Contrary to some popular accounts, lightning almost certainly never struck the kite directly; had it done so, Franklin would likely have been killed. He was nevertheless able to observe that the Leyden jar was being charged, demonstrating the electrical nature of lightning.

The Leyden jar is also regarded as the first electrical capacitor, a component that is fundamental to modern electric circuits, and it laid the groundwork for the development of more sophisticated capacitors. Although Leyden jars fell out of favor after the invention of the battery, the basic principle of the Leyden jar capacitor found renewed use at the end of the 19th century and lives on in modern electronic devices, albeit in a much smaller form.

Overall, the Leyden jar played a pivotal role in the early exploration and understanding of electricity. Its impact can be seen in the subsequent development of electrical science and technology.
