Quantum mechanics is a paradigm-shifting theory of physics that describes nature at the smallest scales. Understanding it requires a journey into the realm of the atomic and subatomic, where the rules that govern our everyday reality no longer apply. The theory was developed gradually over the first decades of the 20th century.

The Birth of Quantum Mechanics

Quantum mechanics emerged early in the 20th century as a revolutionary framework for understanding the behavior of particles at the atomic and subatomic levels. It grew out of the observations and experiments of a handful of scientists of that period. As the 19th century came to a close, classical physics was reaching its limits: new phenomena were being observed that it couldn’t explain. Quantum ideas first entered mainstream scientific thought in 1900, when Max Planck used quantized properties in his attempt to solve the black-body radiation problem. Planck introduced the concept of quantization, proposing that energy is emitted or absorbed in discrete units called quanta. Quantization was initially regarded as a mathematical trick but later proved to be a fundamental aspect of nature.

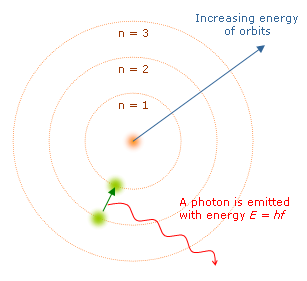

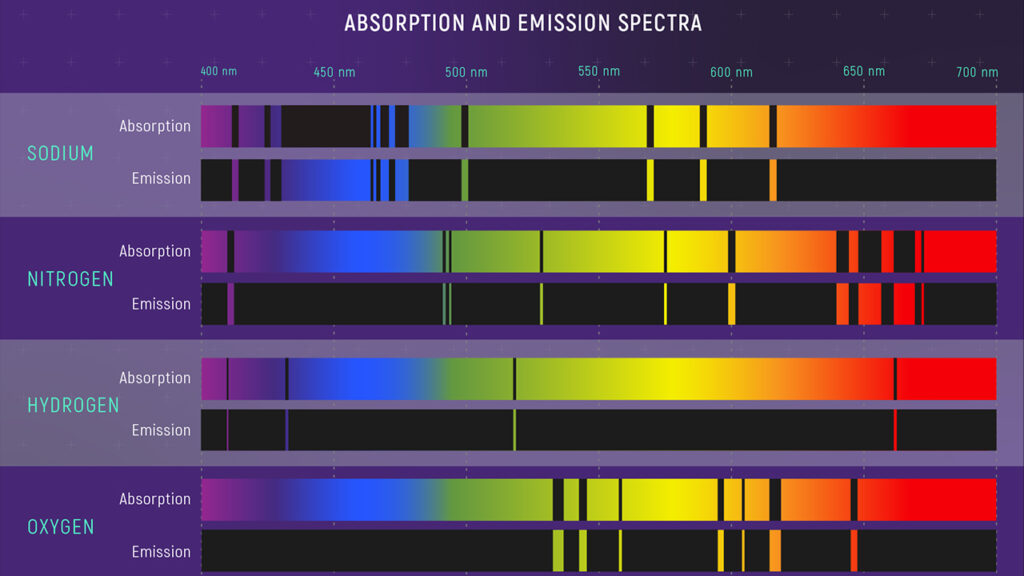

In 1905, five years after Planck’s proposal, Albert Einstein offered a quantum-based theory to describe the photoelectric effect, work that earned him the Nobel Prize in Physics in 1921. The next major leap came from Niels Bohr in 1913. One problem that puzzled physicists of the day was that, according to the electrodynamic theory of the time, an orbiting electron should radiate away its energy almost immediately and crash into the nucleus. Bohr’s solution was to propose a model of the atom in which electrons orbit the nucleus only in definite energy levels, or ‘shells’. In this new theory, an electron moving between orbits would jump instantaneously from one to the other without traveling through the space in between, an idea that became known as a quantum leap. Bohr published his work in a paper called On the Constitution of Atoms and Molecules, and for his work on atomic structure he won the Nobel Prize in Physics in 1922.
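To make Bohr's definite energy levels concrete, here is a minimal Python sketch for hydrogen. It assumes the textbook Bohr-model formula E_n = -13.6 eV / n², which the article does not state explicitly, and uses rounded constants. The function names are hypothetical and chosen only for illustration.

```python
# Rounded physical constants (assumed values, not taken from the article)
RYDBERG_ENERGY_EV = 13.605693  # binding energy of hydrogen's lowest level, in eV
HC_EV_NM = 1239.841984         # Planck constant times speed of light, in eV*nm


def bohr_energy_ev(n: int) -> float:
    """Energy of level n in the Bohr model of hydrogen, in electronvolts."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return -RYDBERG_ENERGY_EV / n**2


def transition_wavelength_nm(n_upper: int, n_lower: int) -> float:
    """Wavelength of the photon emitted when the electron drops between levels."""
    if n_upper <= n_lower:
        raise ValueError("n_upper must be greater than n_lower for emission")
    # The photon carries off exactly the energy difference between the levels.
    photon_energy_ev = bohr_energy_ev(n_upper) - bohr_energy_ev(n_lower)
    return HC_EV_NM / photon_energy_ev


if __name__ == "__main__":
    for n in range(1, 5):
        print(f"n = {n}: {bohr_energy_ev(n):.3f} eV")
    print(f"3 -> 2 transition: {transition_wavelength_nm(3, 2):.1f} nm")
```

Only certain energies appear in the output, with nothing in between, which is the quantization Bohr proposed. Under these assumptions the jump from the third level to the second comes out near 656 nm, close to the red line in hydrogen's visible spectrum.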

With these discoveries and others, quantum mechanics became a revolutionary field in physics. It also became one of the strangest fields in science to study and attempt to understand, because things on the subatomic level don’t behave like anything we are used to in our everyday experience. Because of this strangeness, some physicists, including Albert Einstein, did not like quantum mechanics very much. Despite its strangeness and messiness, quantum mechanics is known for the high accuracy of its predictions. In the decades that followed, quantum mechanics was combined with special relativity to form quantum field theory, the framework behind quantum electrodynamics and the Standard Model.

Foundational Principles of Quantum Mechanics

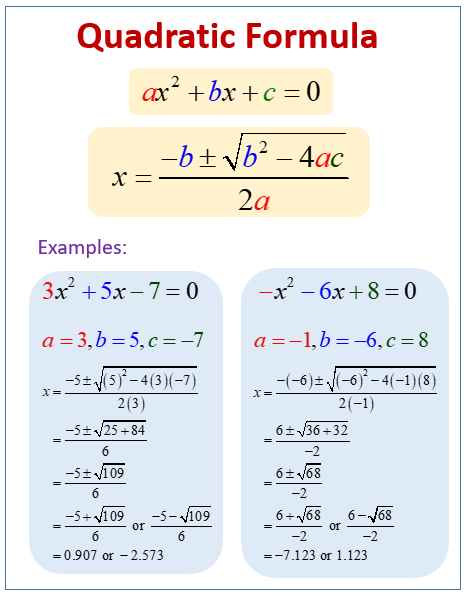

The main principle of quantum mechanics is that energy is emitted or absorbed in discrete packets, called quanta. This differs from classical physics, where energy was thought to vary continuously and could take any value. Other key principles of quantum mechanics include:

- Uncertainty Principle: this states that certain pairs of physical properties, such as position and momentum, cannot be simultaneously known with absolute precision. It was first formulated by Werner Heisenberg in 1927 and arises from the wave-like nature of particles at the quantum level.

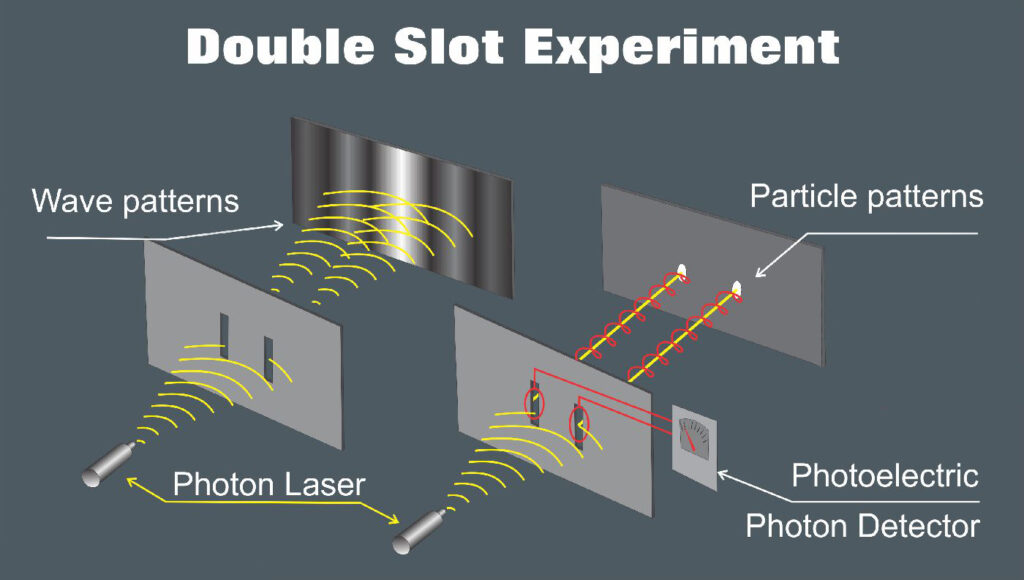

- Wave-Particle Duality: this describes the dual wave-like and particle-like behavior exhibited by objects at the quantum level. Electrons, for instance, can exhibit both particle-like behavior (localized position and momentum) and wave-like behavior (interference and diffraction) under different experimental conditions.

- Quantum Entanglement: this is the curious phenomenon that occurs when a pair or group of particles becomes correlated in such a way that the quantum state of one particle cannot be described independently of the others. This means that a measurement on one particle is instantly correlated with the outcome of a measurement on the other, regardless of the distance between them, although this cannot be used to send information faster than light. These correlations have been confirmed in numerous experiments with photons, electrons, and even individual molecules.
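The uncertainty principle listed above can be made concrete with a short calculation. The sketch below assumes the standard form of the position-momentum relation, Δx · Δp ≥ ħ/2, which the article describes only in words. The constants are rounded values and the function names are hypothetical.

```python
# Rounded physical constants (assumed values, not taken from the article)
HBAR = 1.054571817e-34           # reduced Planck constant, in J*s
ELECTRON_MASS = 9.1093837015e-31  # electron rest mass, in kg


def min_momentum_uncertainty(delta_x: float) -> float:
    """Smallest momentum uncertainty (kg*m/s) allowed for a position uncertainty delta_x (m)."""
    if delta_x <= 0:
        raise ValueError("delta_x must be positive")
    return HBAR / (2 * delta_x)


def min_velocity_uncertainty(delta_x: float, mass: float) -> float:
    """Same bound expressed as a velocity uncertainty (m/s), ignoring relativistic effects."""
    if mass <= 0:
        raise ValueError("mass must be positive")
    return min_momentum_uncertainty(delta_x) / mass


if __name__ == "__main__":
    atom_size = 1e-10  # roughly the size of an atom, in meters
    dv = min_velocity_uncertainty(atom_size, ELECTRON_MASS)
    print(f"Electron confined to {atom_size} m: velocity uncertain by at least {dv:.3e} m/s")
```

For an electron confined to a region about the size of an atom, the bound works out to several hundred kilometers per second. The same calculation for an everyday object gives a value far too small to notice, which is why the effect only matters at the quantum scale.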

Together, these four principles provide solutions for physical problems that classical physics cannot account for. Quantum mechanics therefore provides a much more comprehensive framework than classical physics for understanding the behavior of matter and energy at the smallest scales. Its significance, however, goes further than that. It has transformed our understanding of matter and energy and upended our notions of predictability and determinism, with interesting philosophical implications. It is one of those areas of science that remind us of the power of human curiosity, ingenuity, and perseverance in unraveling the mysteries of the universe.
