Electromagnetism was discovered in the early 19th century, beginning around 1820. It is the branch of physical science where electricity and magnetism come together. Like many discoveries in the history of science, it did not come in a single stroke of genius but through the cumulative work of many great thinkers over a long stretch of time. The importance of this discovery can hardly be overstated, especially in today’s technological world. The principles of electromagnetism form the basis for nearly all electronic devices in use today: radar, radio, television, the internet, and the personal computer, to name a few. We take these devices, and the fact that they work, for granted, but understanding the events that led to the discovery of the principles they are built on can prove illuminating.

Initial Observations of Electricity and Magnetism

When the electric and magnetic forces were first identified, they were considered to be separate forces acting independently of one another. The effects of these two forces were observed as far back as 800 BCE in Greece with the mining of lodestone, a naturally magnetic mineral. Lodestone was later used in the production of the magnetic compass. The Chinese Han Dynasty first developed the compass in the second century BCE, and this invention had a profound impact on human civilization. Modern systematic scientific experiments began only in the late 16th century with the work of William Gilbert, whose De Magnete was published in 1600. Over the following centuries, knowledge of the electric and magnetic forces was advanced by scientists such as Otto von Guericke, Pieter van Musschenbroek, Benjamin Franklin, Joseph Priestley, Alessandro Volta, Luigi Galvani, and many others.

Two Key Discoveries Begin the Process of Unification

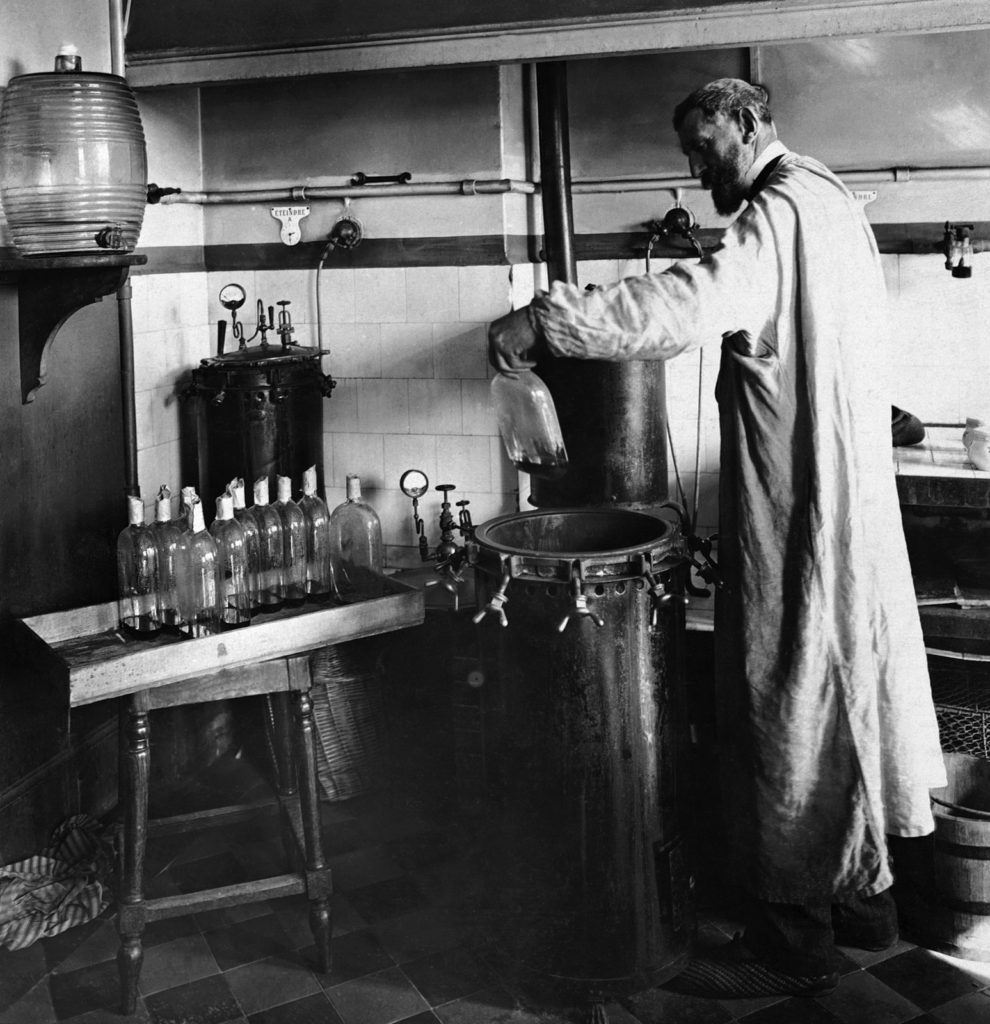

The first major hint that electricity and magnetism were one force came during a lecture on April 21, 1820, when the Danish physicist Hans Christian Oersted noticed that his compass needle moved in the presence of an electric current. Specifically, he found that if a wire carrying an electric current is placed parallel to the needle, the needle will turn at a right angle to the direction of the current. This observation prompted him to continue his investigations into the relationship. Several months after his lecture, Oersted published a paper demonstrating that an electric current produces a circular magnetic field as it flows through a wire. Oersted’s paper demonstrated that electricity could produce magnetic effects, but this raised another question. Could the opposite happen? Could magnetism induce an electric current?

In 1831 Michael Faraday provided the answer. In a series of experiments he demonstrated that a changing magnetic field, such as that of a moving magnet, can produce an electric current. This process is known as electromagnetic induction. An American physicist, Joseph Henry, independently discovered the same effect around the same time. However, Faraday published his results first. Faraday’s and Oersted’s work showed that each force can act on the other, so the relationship works both ways. The discovery of electromagnetism was now beginning to come into focus. To fully synthesize these two forces into one, a mathematical model was needed.

A Mathematical Synthesis of Electricity and Magnetism

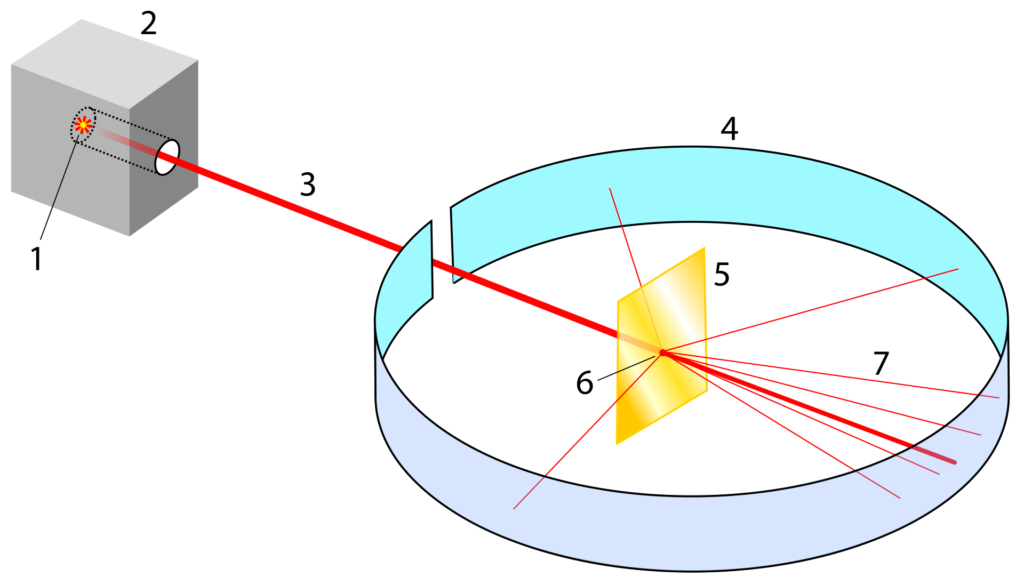

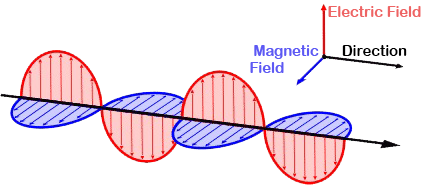

By 1862 James Clerk Maxwell had provided the mathematical framework needed to unite the two forces in a single theory of electromagnetism. Oersted’s and Faraday’s discoveries provided the basis for his theory. Indeed, Faraday’s law of induction became one of Maxwell’s four equations.

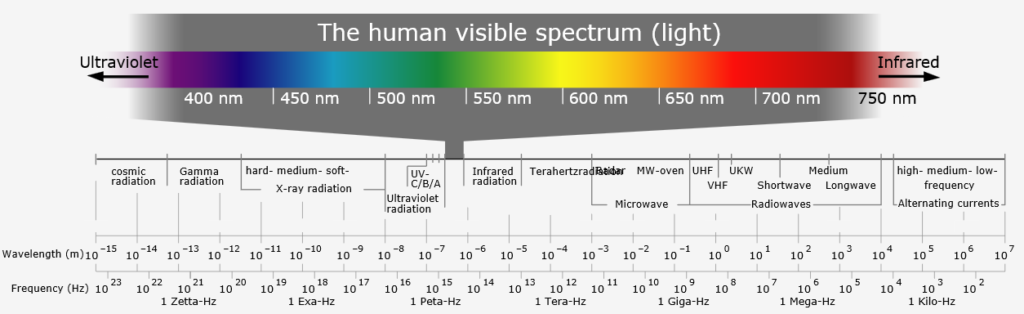

His theory also made predictions that were radical for the time and difficult for most to digest. It implied that electromagnetic waves travel at the speed of light, a match Maxwell thought highly unlikely to be a coincidence. His equations also predicted the existence of other waves traveling at the speed of light. These ideas gained little traction in the scientific community at large until 1887, when the German physicist Heinrich Hertz discovered radio waves. The once radical predictions derived from Maxwell’s theory had been verified.
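The match between the speed of electromagnetic waves and the speed of light can be checked with a few lines of arithmetic. In vacuum, Maxwell's equations give a wave speed of 1/sqrt(μ0 ε0), where μ0 and ε0 are the magnetic and electric constants. The sketch below is only an illustration: the constant values are modern reference values, not the figures Maxwell had available, and the function and variable names are made up for this example.

```python
import math

# Modern reference values (assumed here for illustration; Maxwell worked
# with less precise 19th-century measurements).
MU_0 = 1.25663706212e-6      # magnetic constant, in henries per metre
EPSILON_0 = 8.8541878128e-12  # electric constant, in farads per metre
SPEED_OF_LIGHT = 299_792_458  # defined speed of light, in metres per second


def wave_speed(mu, epsilon):
    """Speed of an electromagnetic wave in a medium with the given constants."""
    return 1.0 / math.sqrt(mu * epsilon)


predicted = wave_speed(MU_0, EPSILON_0)
print(f"Predicted wave speed: {predicted:.6e} m/s")
print(f"Speed of light:       {SPEED_OF_LIGHT:.6e} m/s")
```

Both printed values come out at roughly 3.0 × 10^8 metres per second, which is the agreement that led Maxwell to connect light with electromagnetism.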

(Credit: Creative Commons)

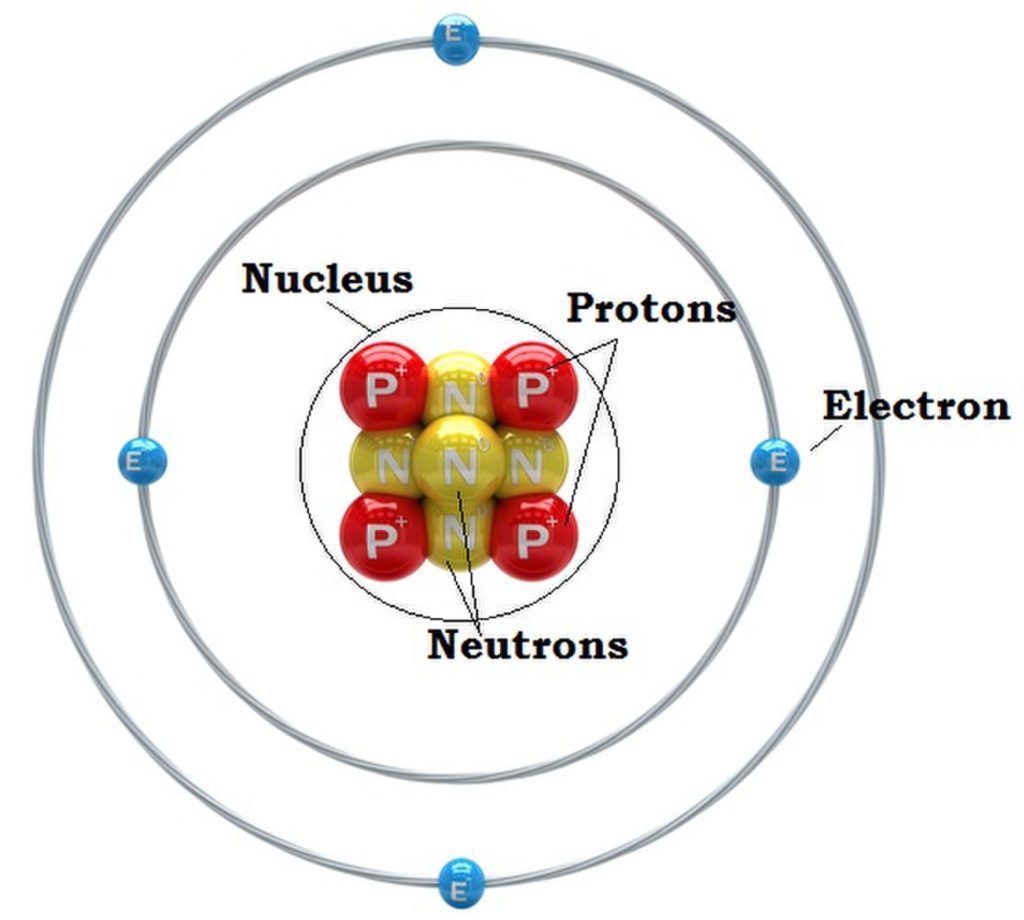

The discovery of electromagnetism changed the course of human civilization. Today it is understood as one of the four fundamental forces in nature, along with the gravitational force, the strong nuclear force, and the weak nuclear force. By understanding and applying its principles, people have sparked a revolution in technology and electronics. The story of its discovery highlights the power of the scientific method. The modern world as we know it would not exist without this insight into this incredible force of nature.
